How to Scrape Job Posting Data Using Python and BeautifulSoup

Job posting data scraping is important for recruiters, researchers, and job seekers. By 2025, web scraping tools and techniques have evolved significantly, offering more efficient and robust ways to gather job data. This blog walks through the steps to scrape job posting data while staying within ethical and legal limits.

What is Job Posting Data Scraping?

Job scraping is the automated collection of job postings and related data from job boards, career sites, and company websites. This data can include job titles, company names, locations, salaries, job descriptions, required qualifications, and more. The main objective is to collect large datasets quickly and efficiently so that companies, researchers, and recruiters can keep up with the latest developments in the labor market.

How Do Various Industries Use Job Data?

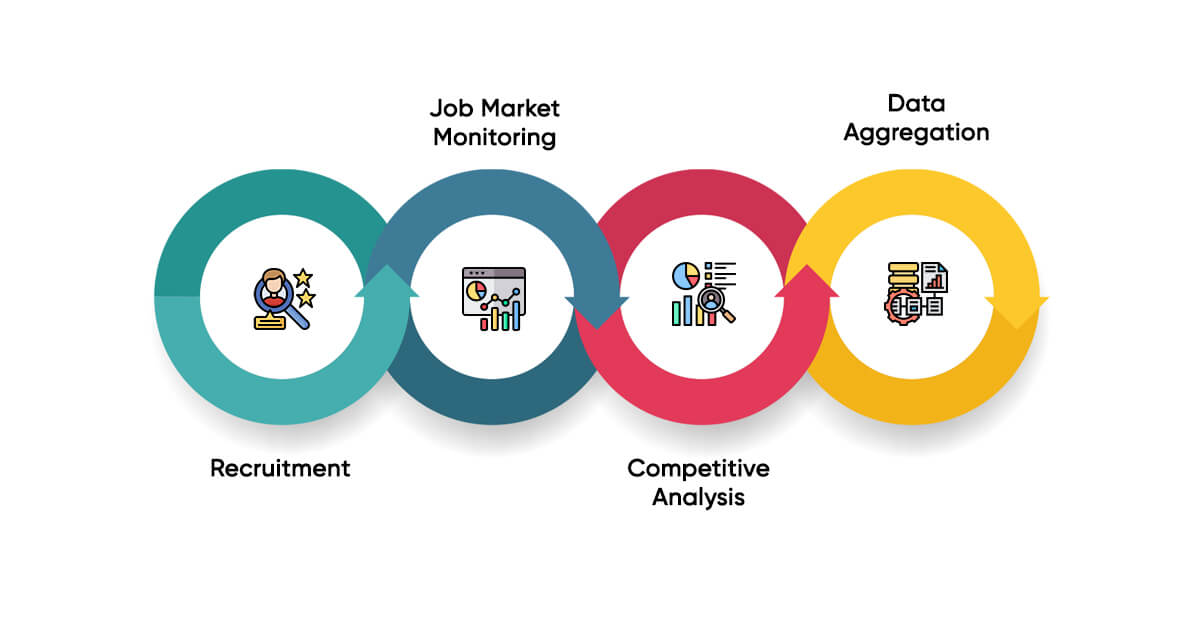

Job data scraping gathers job data by "crawling" websites with a web scraping bot or scraping tool. The collected data is then used for several purposes, including:

Recruitment

Locating positions that fit specific roles or skill sets.

Job market monitoring

Identifying trends such as changes in industry hiring practices, demand for skills, and salary variations.

Competitive Analysis

Analyzing competitors' recruiting practices.

Data Aggregation

Gathering employment information for research studies, job boards, search engines, or employment market surveys.

Why is Job Scraping Important for Businesses and Recruitment Agencies?

For companies in industries like workforce analytics, HR technology, and recruitment, job scraping has become increasingly important. Here are a few reasons why:

Efficiency

Compiling job listings by hand takes a great deal of time and effort. Job scraping automates this process and frees up valuable resources.

Real-time Data

Scraping tools give users access to the most recent data by collecting new job posts several times a day.

Data-Driven Insights

Employers and recruiters can make better decisions by gathering information about the labor market, including changes in salaries and the demand for particular talents.

Global Reach

By gathering job postings from various industries and geographical areas, job scraping gives businesses a more complete picture of the global labor market.

What are the Legal and Ethical Guidelines and Considerations for Job Scraping?

The legality of job scraping depends on the jurisdiction, the method used, and the type of data being scraped. The terms of service (ToS) of many websites expressly forbid scraping without authorization. Breaking these rules can lead to legal consequences such as cease-and-desist letters, account suspensions, and even lawsuits.

Terms of Service Restrictions

The ToS of several websites, including Indeed and LinkedIn, prohibit scraping. Businesses must review these conditions before doing any scraping.

Data Privacy Laws

The European Union's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) set strict rules on collecting and storing personal data. If a job scraping tool unintentionally collects personal information, the company risks violating these privacy laws.

When scraping job data, businesses must consider ethical principles in addition to legal considerations:

Observe Website Policies

Businesses should abide by website policies and avoid aggressive scraping methods that overwhelm servers, even where the law permits them.

Transparency

If data scraping entails gathering personal information, companies should be open and honest regarding how that information will be used and make sure they have permission when needed.

Data Quality Over Quantity

To reduce waste and prevent drawing false conclusions, organizations should concentrate on gathering high-quality, pertinent data rather than scraping enormous volumes of useless data.

What are the Best Practices and Guidelines for Extracting Job Data?

Respect ToS

Make sure you are always aware of and abide by the terms of service of the websites that you are looking to scrape.
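A site's robots.txt file is not its terms of service, but it is a machine-readable statement of which paths the operator does not want crawled, so checking it is a sensible complement to reading the ToS. The minimal sketch below uses Python's standard `urllib.robotparser`; the robots.txt content, URLs, and the `MyJobResearchBot` user-agent are hypothetical, and the text is parsed from a string so the example makes no network request.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; for a real site you would call
# rp.set_url("https://<site>/robots.txt") and rp.read() instead of parse()
ROBOTS_TXT = """\
User-agent: *
Disallow: /jobs/apply/
Crawl-delay: 5
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without making a network request."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


rp = build_parser(ROBOTS_TXT)
agent = "MyJobResearchBot"  # hypothetical user-agent name
for url in ["https://example-job-board.com/jobs",
            "https://example-job-board.com/jobs/apply/123"]:
    print(url, "allowed" if rp.can_fetch(agent, url) else "disallowed")

# A declared crawl delay (in seconds) is a reasonable minimum spacing between requests
print("crawl delay:", rp.crawl_delay(agent))
```

An "allowed" result only means robots.txt does not block the path; the ToS may still prohibit scraping.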

Use Data Responsibly

Don't gather personal information unless it's absolutely necessary and permitted by law.
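Job descriptions sometimes contain a recruiter's email address or phone number. One simple safeguard, sketched below under the assumption that you do not need these details, is to redact them before storing the text. The regular expressions are illustrative and crude: they will miss some formats and the phone pattern can also catch things like dates, so treat this as a starting point rather than a compliance guarantee.

```python
import re

# Illustrative patterns only; they will not catch every format and may over-match
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_personal_info(text: str) -> str:
    """Replace email addresses and phone-number-like strings with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)  # redact emails first so their digits aren't touched
    return PHONE_RE.sub("[PHONE]", text)


description = "Send your CV to jane.doe@example.com or call +1 (555) 123-4567."
print(redact_personal_info(description))
```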

Restrict Scraping Frequency

Limit how many requests you make within a given time frame to avoid overloading the source server.
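A minimal sketch of client-side throttling using only the standard library: the hypothetical `RateLimiter` class keeps consecutive requests at least a fixed interval apart. The 0.2-second interval is just for the demo; real crawls usually wait several seconds, and a crawl delay declared in robots.txt is a sensible lower bound. `fetch_page` is a hypothetical placeholder for your own request code.

```python
import time


class RateLimiter:
    """Keep requests at least `min_interval` seconds apart (simple fixed spacing)."""

    def __init__(self, min_interval: float) -> None:
        self.min_interval = min_interval
        self._last_call = None  # monotonic timestamp of the previous request

    def wait(self) -> None:
        """Sleep just long enough to respect the minimum interval."""
        now = time.monotonic()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                time.sleep(remaining)
        self._last_call = time.monotonic()


limiter = RateLimiter(min_interval=0.2)  # demo value only
start = time.monotonic()
for page in range(1, 4):
    limiter.wait()
    # fetch_page(page) would go here (hypothetical helper)
    print(f"page {page} at {time.monotonic() - start:.2f}s")
```

Using `time.monotonic()` rather than `time.time()` keeps the spacing correct even if the system clock is adjusted mid-run.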

What are the Steps to Extract Job Data using Python and BeautifulSoup?

The following steps will help you extract job board data:

Defining your Goals

Before starting to scrape, identify the purpose of your scraping. Do you want to monitor salaries, job descriptions, or skills?
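One way to make your goals concrete is to write down the fields you plan to collect as a record type before building any scraper. The `JobPosting` class below is a hypothetical schema for a salary-and-skills project, sketched with Python's standard `dataclasses` module; the field names and sample values are assumptions to adapt to your own goals.

```python
from dataclasses import dataclass, field, asdict
from typing import List, Optional


@dataclass
class JobPosting:
    """Hypothetical record for a project tracking salaries and skills."""
    title: str
    company: str
    location: str
    salary: Optional[str] = None  # raw salary text, parsed later if needed
    skills: List[str] = field(default_factory=list)  # fresh list per record
    source_url: str = ""


posting = JobPosting("Data Analyst", "Acme Corp", "Remote",
                     salary="$70,000 - $85,000", skills=["SQL", "Python"])
print(asdict(posting))
```

Fields you cannot justify against your goal are good candidates to leave out, which also supports the "data quality over quantity" principle.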

Select Targeted Website

Choose the job boards you want to extract data from, such as LinkedIn, Indeed, or Glassdoor, and check each one's terms of service first.

The following Python code uses the requests library and BeautifulSoup to extract job titles, companies, and locations from a job listing page:

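```python
import requests
from bs4 import BeautifulSoup

# Placeholder URL and CSS classes: adjust them to the markup of the site you scrape
url = "https://example-job-board.com/jobs"
headers = {"User-Agent": "Your User-Agent"}

response = requests.get(url, headers=headers, timeout=10)
response.raise_for_status()  # raise an exception on 4xx/5xx responses such as 403 or 429

soup = BeautifulSoup(response.content, "html.parser")

# Extract job postings
for job in soup.find_all("div", class_="job-listing"):
    title = job.find("h2")
    company = job.find("div", class_="company")
    location = job.find("div", class_="location")
    # Skip listings missing a field instead of crashing on None
    if not (title and company and location):
        continue
    print(
        f"Title: {title.get_text(strip=True)}, "
        f"Company: {company.get_text(strip=True)}, "
        f"Location: {location.get_text(strip=True)}"
    )
```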

Handle Dynamic Content

Many modern job boards load listings with JavaScript, so the HTML returned by a plain HTTP request may not contain them. Use tools like Selenium or Puppeteer to render the page and extract dynamically loaded content.
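Before reaching for a browser, it can be worth checking (in your browser's developer tools, Network tab) whether the page fetches its listings from a JSON endpoint; if the site's terms allow it, parsing that JSON is often simpler than rendering the page. This is an alternative technique, not the Selenium or Puppeteer approach. The payload shape and its keys (`results`, `jobTitle`, `employer`) are hypothetical, and the sample is an inline string so the sketch runs offline.

```python
import json

# Hypothetical payload shape; inspect the real response to learn the actual keys
payload = """
{"results": [
  {"jobTitle": "Backend Engineer", "employer": {"name": "Acme Corp"}, "location": "Berlin"},
  {"jobTitle": "Data Analyst", "employer": {"name": "Globex"}, "location": null}
]}
"""


def parse_jobs(raw: str) -> list:
    """Flatten the nested JSON into simple dicts, tolerating missing fields."""
    data = json.loads(raw)
    jobs = []
    for item in data.get("results", []):
        jobs.append({
            "title": item.get("jobTitle"),
            "company": (item.get("employer") or {}).get("name"),
            "location": item.get("location") or "Unknown",  # null -> "Unknown"
        })
    return jobs


for job in parse_jobs(payload):
    print(job)
```

With a live endpoint, `requests.get(api_url, timeout=10).json()` returns the already-decoded data, so you would loop over it directly instead of calling `json.loads`.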

Store and Analyze Data

Save the data in a structured format such as CSV, JSON, or a database for later analysis and monitoring.
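A minimal sketch of saving scraped records to both CSV and JSON with Python's standard `csv` and `json` modules. The sample records and the file names `jobs.csv` and `jobs.json` are placeholders; the CSV writer assumes every record has the same keys as the first one.

```python
import csv
import json
from pathlib import Path

# Sample records standing in for scraped results
jobs = [
    {"title": "Backend Engineer", "company": "Acme Corp", "location": "Berlin"},
    {"title": "Data Analyst", "company": "Globex", "location": "Remote"},
]


def save_csv(rows: list, path: Path) -> None:
    """Write a list of dicts to CSV, using the first row's keys as the header."""
    if not rows:
        return  # nothing to write
    with path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)


def save_json(rows: list, path: Path) -> None:
    """Write the same records as a pretty-printed JSON array."""
    path.write_text(json.dumps(rows, indent=2, ensure_ascii=False), encoding="utf-8")


save_csv(jobs, Path("jobs.csv"))
save_json(jobs, Path("jobs.json"))
```

CSV suits spreadsheets and quick analysis; JSON keeps nested fields (such as lists of skills) intact.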

What are the Challenges in Scraping Targeted Websites?

Extracting job listings from many different websites is more complicated than scraping a single centralized platform like Indeed or LinkedIn. Here are some difficulties you may face:

Variations in Website Structure

Because every website is different, scraping techniques must be tailored to each site. A script written for a static HTML page will not work properly on a website that uses JavaScript or AJAX to load content dynamically.
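One common way to handle per-site differences is to keep the site-specific details in configuration and normalize every source into one shared schema. The sketch below does this for field names; the site keys (`board_a`, `board_b`) and their field names are hypothetical. In HTML scraping, the same idea applies to per-site CSS selectors.

```python
# Hypothetical per-site mappings: common field name -> that site's field name
SITE_FIELD_MAPS = {
    "board_a": {"title": "jobTitle", "company": "companyName", "location": "city"},
    "board_b": {"title": "position", "company": "employer", "location": "place"},
}


def normalize(site: str, record: dict) -> dict:
    """Rename a site-specific record's fields into the common schema."""
    mapping = SITE_FIELD_MAPS[site]
    # Missing fields become None rather than raising KeyError
    return {common: record.get(site_key) for common, site_key in mapping.items()}


a = normalize("board_a", {"jobTitle": "QA Engineer", "companyName": "Initech", "city": "Austin"})
b = normalize("board_b", {"position": "QA Engineer", "employer": "Initech", "place": "Austin"})
print(a)
print(a == b)
```

Adding a new source then means adding a mapping entry rather than rewriting downstream analysis code.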

Bot Detection Systems

To stop automated scraping, many websites use CAPTCHAs, honeypot fields, or other bot detection methods. Getting around these measures often requires advanced strategies such as proxy rotation, CAPTCHA-solving services, and headless browsers that mimic human browsing activity.

Rate Limits and Bans

Many websites limit how many requests they accept from a single IP address within a short period. A scraper that exceeds these limits may be blocked from the site. To avoid this, scrapers distribute requests across several IP addresses with proxy services and use intelligent scheduling.
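On the scheduling side, a widely used pattern is exponential backoff: when the server answers HTTP 429 (Too Many Requests) or 503 (Service Unavailable), wait progressively longer before retrying. The sketch below is a minimal standard-library version; `fetch` is a hypothetical zero-argument callable returning `(status_code, body)`, standing in for a real HTTP call, and the delay values are illustrative.

```python
import random
import time


def fetch_with_backoff(fetch, max_retries: int = 4, base_delay: float = 1.0,
                       max_delay: float = 30.0):
    """Call fetch() and retry on HTTP 429/503 with capped exponential backoff plus jitter."""
    for attempt in range(max_retries + 1):
        status, body = fetch()
        if status not in (429, 503):
            return status, body
        if attempt == max_retries:
            break  # give up and return the last throttled response
        # Double the wait each attempt, cap it, and add jitter so retries don't synchronize
        delay = min(max_delay, base_delay * 2 ** attempt)
        time.sleep(delay + random.uniform(0, delay / 2))
    return status, body


# Simulated server: throttles twice, then succeeds
responses = iter([(429, ""), (429, ""), (200, "<html>ok</html>")])
status, body = fetch_with_backoff(lambda: next(responses), base_delay=0.01)
print(status, body)
```

If the server sends a `Retry-After` header, honoring that value is usually better than a computed delay.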

Maintain Data Accuracy

Job data changes continuously as new postings appear and outdated ones are taken down. Regular scraping is therefore necessary to keep the collected data accurate and current. Websites may also change the format or structure of their job postings, which requires frequent updates to scraping programs.
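When you scrape regularly, comparing consecutive snapshots shows which postings are new, which were taken down, and which changed. The sketch below assumes each posting has a stable ID (for example, one taken from its URL); the `diff_snapshots` helper and the sample data are hypothetical.

```python
def diff_snapshots(previous: dict, current: dict) -> dict:
    """Compare two scrapes keyed by job ID and report new, removed, and changed postings."""
    prev_ids, curr_ids = set(previous), set(current)
    return {
        "new": sorted(curr_ids - prev_ids),
        "removed": sorted(prev_ids - curr_ids),
        # Postings present in both scrapes whose fields differ
        "changed": sorted(j for j in prev_ids & curr_ids if previous[j] != current[j]),
    }


# Hypothetical snapshots: job ID -> scraped fields
monday = {"101": {"title": "Data Analyst"}, "102": {"title": "QA Engineer"}}
tuesday = {"102": {"title": "Senior QA Engineer"}, "103": {"title": "ML Engineer"}}
print(diff_snapshots(monday, tuesday))
```

A sudden spike in "removed" or "changed" entries across a whole site can also signal that the site's layout changed and the scraper needs updating.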

What are the Future Trends of Job Scraping?

As technology advances, job scraping is set to become more sophisticated and more essential to the hiring and recruitment analytics sectors. Innovations in big data, machine learning, and artificial intelligence (AI) will shape its future and are likely to improve the precision, speed, and scalability of job scraping operations.

Integrating Artificial Intelligence and Machine Learning

The growing use of AI and machine learning in job scraping is one of the most interesting trends. By automating repetitive processes, these technologies can speed up scraping and improve the quality and relevance of the data gathered. For instance:

Content Understanding: Machine learning models can be trained to better understand and categorize the information in job listings. This lets scrapers capture details that may not be stated explicitly in job titles or descriptions, such as soft skills, company culture, or seniority level.

Data Prediction: Using previously scraped data, AI models can help forecast employment market patterns. For instance, machine learning algorithms can examine the descriptions of recently advertised positions to spot emerging job titles and skill sets before they become widespread.
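Forecasting models need a baseline signal to work from. The sketch below is not machine learning: it is a simple frequency comparison that counts how many postings mention each skill in two periods and lists the skills that grew. The skill lists are hypothetical stand-ins for skills already extracted from scraped postings.

```python
from collections import Counter

# Hypothetical skills extracted from postings in two periods
q1_postings = [["python", "sql"], ["sql", "excel"], ["python"]]
q2_postings = [["python", "dbt"], ["dbt", "sql"], ["python", "dbt"]]


def skill_counts(postings: list) -> Counter:
    """Count how many postings mention each skill (once per posting)."""
    return Counter(skill for skills in postings for skill in set(skills))


def rising_skills(before: Counter, after: Counter) -> list:
    """Return (skill, increase) pairs for skills that grew, largest increase first."""
    changes = {s: after[s] - before[s] for s in after if after[s] > before[s]}
    return sorted(changes.items(), key=lambda item: (-item[1], item[0]))


print(rising_skills(skill_counts(q1_postings), skill_counts(q2_postings)))
```

With real data you would normalize by the total number of postings per period, since raw counts also rise when a site simply lists more jobs.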

Natural Language Processing (NLP)

Natural Language Processing (NLP) will play a large part in making job scraping more precise. NLP can be used to examine job descriptions in depth, helping to identify skill requirements, industry trends, and even terminology unique to a given organization. This makes data collection more precise and increases the analytical value of the extracted job data.
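A very basic starting point for skill extraction is dictionary matching: look for terms from a curated skill list inside each description. Full NLP pipelines go much further (handling synonyms, context, and negation), so treat this as a simple baseline sketch. The `SKILLS` vocabulary and the `extract_skills` helper are hypothetical.

```python
import re

# Hypothetical skill vocabulary; real projects maintain much larger curated lists
SKILLS = ["Python", "SQL", "Power BI", "machine learning", "C++"]


def extract_skills(description: str, vocabulary=SKILLS) -> list:
    """Return vocabulary terms found in the text as whole terms, case-insensitively."""
    found = []
    for skill in vocabulary:
        # re.escape handles symbols like '+'; the lookarounds stop 'SQL' matching inside 'MySQL'
        pattern = r"(?<![\w+])" + re.escape(skill) + r"(?![\w+])"
        if re.search(pattern, description, flags=re.IGNORECASE):
            found.append(skill)
    return found


text = "We need strong Python and SQL skills; experience with machine learning or Power BI is a plus."
print(extract_skills(text))
```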

How Are AI and ML Revolutionizing Job Scraping?


Faster Data Processing

AI-driven web scrapers can process large amounts of job data faster than conventional techniques, which makes near-real-time analysis of job market trends possible.

Error Reduction

Machine learning models can be developed to automatically detect and fix errors in scraped data, improving the dataset's overall quality.
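Before any model is involved, simple rules already catch many scraping errors. The sketch below is a rule-based stand-in, not a trained model: it normalizes whitespace and flags missing required fields and inverted salary ranges. The field names (`title`, `company`, `salary_min`, `salary_max`) and the `clean_posting` helper are hypothetical.

```python
import re


def clean_posting(raw: dict) -> tuple:
    """Normalize whitespace and flag obvious problems in one scraped record.

    Returns (cleaned_record, issues). Rule-based, not a trained model.
    """
    # Collapse runs of whitespace/newlines in string fields and trim the ends
    cleaned = {k: re.sub(r"\s+", " ", v).strip() if isinstance(v, str) else v
               for k, v in raw.items()}
    issues = []
    for required in ("title", "company"):
        if not cleaned.get(required):
            issues.append(f"missing {required}")
    low, high = cleaned.get("salary_min"), cleaned.get("salary_max")
    if isinstance(low, (int, float)) and isinstance(high, (int, float)) and low > high:
        issues.append("salary_min greater than salary_max")
    return cleaned, issues


record = {"title": "  Data\n Analyst ", "company": "", "salary_min": 90000, "salary_max": 70000}
print(clean_posting(record))
```

Records flagged this way are also useful labeled examples if you later train a model to detect bad data.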

Custom Job Matching

By analyzing candidate profiles alongside job descriptions, AI-powered job scrapers can better match job posts with qualified applicants, helping recruiters find the most qualified candidates faster.
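AI-based matching typically relies on learned models, but the underlying idea can be illustrated with a much simpler measure. The sketch below swaps in Jaccard similarity (shared skills divided by all skills across both sets) to rank jobs for one candidate; the job titles and skill sets are hypothetical.

```python
def jaccard(a: set, b: set) -> float:
    """Shared items divided by total distinct items (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def rank_jobs(candidate_skills: set, jobs: dict) -> list:
    """Rank jobs by skill overlap with one candidate, best match first."""
    scores = [(title, jaccard(candidate_skills, skills)) for title, skills in jobs.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Hypothetical jobs and their required skills
jobs = {
    "Data Analyst": {"sql", "excel", "python"},
    "ML Engineer": {"python", "pytorch", "docker"},
    "Frontend Developer": {"javascript", "react", "css"},
}
print(rank_jobs({"python", "sql", "pandas"}, jobs))
```

Exact set overlap misses related skills (for example, "pandas" and "python"); learned embeddings are one way AI-powered tools address that.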

Conclusion

For companies, recruiters, and analysts seeking to learn more about the labor market, job scraping is an effective tool. Whether you are extracting job postings from sites like Indeed and LinkedIn or building custom solutions for niche websites, job scraping can yield useful information for hiring strategies, market analysis, and competitive research.

As artificial intelligence and machine learning evolve, the future of job scraping looks even brighter. Beyond improving scraping speed and precision, these technologies will let companies extract more detailed and nuanced information from job advertisements. However, companies must also stay aware of the ethical and legal implications of scraping and make sure they comply with website terms of service and data protection laws.

Data scraping companies like iWeb Scraping offer a range of options for businesses looking to start job scraping, from highly tailored frameworks to no-code solutions. By carefully choosing the right tools and strategies, businesses can use job data extraction effectively and stay ahead in a highly competitive labor market.